Poll of the Week

Should internet users be required to verify themselves using a government-issued ID?

US lawmakers in the past have floated the idea of having internet users verify their ages with ID and/or a consent form from their parents to use a service, like social media. Is that something you would be in the favor of?

Programming

Data Science

Finance

Futurism

Gaming

Hackernoon

Life Hacking

Management

307 reads

trending #5

1,025 reads

100+ reads

Business

Society

Media

Machine Learning

Cybersecurity

Web 3

Product Management

Science

Startups

Remote Work

Tech Companies

Tech Stories

Writing

Cloud

Other stories published today

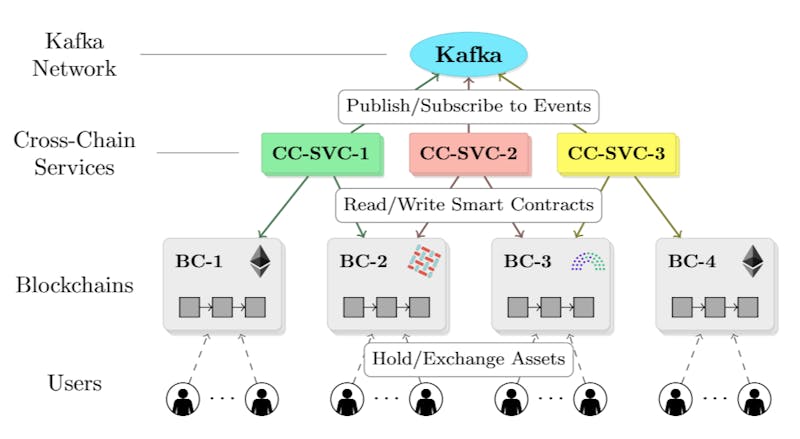

How We Evaluated CGAAL: The Experiments That We Ran

Aiding in the focused exploration of potential solutions.

A Tool Overview of CGAAL: A Distributed On-The-Fly ATL Model Checker

Aiding in the focused exploration of potential solutions.

How CGAAL Model-Checks: A Deeper Insight

Aiding in the focused exploration of potential solutions.